A computer program that analyzes eye movements is better at identifying deceitful people than expert human interrogators, say the University at Buffalo computer scientists who developed the software. Details of the work were presented at the 2011 IEEE Conference on Automatic Face and Gesture Recognition.

“What we wanted to understand was whether there are signal changes emitted by people when they are lying, and can machines detect them? The answer was yes, and yes,” said researcher Ifeoma Nwogu, an assistant professor at UB’s Center for Unified Biometrics and Sensors.

Nwogu worked with UB communication professor Mark G. Frank, a behavioral scientist whose primary area of research has been facial expressions and deception. In the past, Frank’s attempts to automate deceit detection have used systems that analyze changes in body heat or a range of involuntary facial expressions.

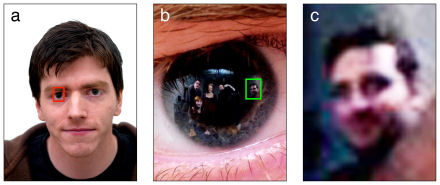

The new system scrutinizes a different trait – eye movement. The software employs a statistical technique to model how people moved their eyes in two distinct situations: during regular conversation, and while fielding a question designed to prompt a lie. People whose pattern of eye movements changed between the first and second scenario were assumed to be lying, while those who maintained consistent eye movement were assumed to be telling the truth.

Previous experiments in which human experts coded facial movements found significant differences in eye contact at times when subjects told a high-stakes lie. Building on those differences, Nwogu set out to create an automated system that could improve upon human accuracy in revealing liars.

The researchers used the non-critical beginning of each video to establish what baseline eye movement looked like for each subject, focusing on the rate of blinking and the frequency with which people shifted their direction of gaze.

They then used their automated system to compare each subject’s baseline eye movements with eye movements during the critical section of each interrogation – the point at which interrogators stopped asking mundane questions and began asking questions designed to prompt a lie. If the machine detected unusual variations from baseline eye movements at this time, the researchers predicted the subject was lying.
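The baseline comparison can be illustrated with a minimal Python sketch. It is not the researchers' method: the article says only that the software used "a statistical technique," so a simple relative-change rule stands in for it here. The `EyeStats` class, the `deviates_from_baseline` function, the 50 percent threshold and all the counts are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class EyeStats:
    """Eye-movement counts for one segment of an interview video (hypothetical type)."""
    blinks: int
    gaze_shifts: int
    duration_s: float

    def rates(self) -> tuple[float, float]:
        """Return (blinks per minute, gaze shifts per minute)."""
        minutes = self.duration_s / 60.0
        return self.blinks / minutes, self.gaze_shifts / minutes


def deviates_from_baseline(baseline: EyeStats, critical: EyeStats,
                           threshold: float = 0.5) -> bool:
    """Return True if either rate in the critical segment differs from the
    subject's own baseline rate by more than `threshold`, expressed as a
    fraction of the baseline. The threshold value is an assumption."""
    for base, crit in zip(baseline.rates(), critical.rates()):
        if base == 0:
            # No baseline activity: any activity at all counts as a change.
            if crit > 0:
                return True
            continue
        if abs(crit - base) / base > threshold:
            return True
    return False


# Made-up numbers: two minutes of mundane questions, then 30 critical seconds.
baseline = EyeStats(blinks=20, gaze_shifts=30, duration_s=120)
changed = EyeStats(blinks=12, gaze_shifts=16, duration_s=30)
steady = EyeStats(blinks=5, gaze_shifts=8, duration_s=30)

flag_changed = deviates_from_baseline(baseline, changed)
flag_steady = deviates_from_baseline(baseline, steady)
```

Each subject is compared only against his or her own baseline, which mirrors the article's description. A subject whose blink and gaze-shift rates stay close to baseline during the critical segment would not be flagged under this rule.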

According to Nwogu, the results suggest that computers are able to learn enough about a person’s behavior in a short time to perform a lie/truth judgment task that challenged even experienced interrogators. Specifically, the automated system correctly identified liars 82 percent of the time. Nwogu said experienced interrogators average closer to 65 percent.

She warned, however, that the technology is not foolproof: A very small percentage of subjects studied were excellent liars, maintaining their usual eye movement patterns as they lied. The next step, she added, will be to expand the number of subjects studied and develop automated systems that analyze body language in addition to eye contact.
