16 November 2009

Universal quantum processor demonstrated

by Kate Melville

Physicists at the National Institute of Standards and Technology (NIST) have demonstrated a "universal" programmable quantum information processor that uses two quantum bits of information to run any program allowed by quantum mechanics.

The demonstration marks the first time a research group has moved beyond having a quantum processor perform individual tasks to performing programmable processing, combining enough inputs and continuous steps to run any possible two-qubit program. "This is the first time anyone has demonstrated a programmable quantum processor for more than one qubit," said NIST postdoctoral researcher David Hanneke, the first author of the paper. "It's a step toward the big goal of doing calculations with lots and lots of qubits. The idea is you'd have lots of these processors, and you'd link them together."

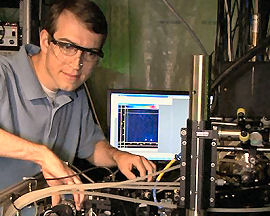

The new processor, described in Nature Physics, stores binary information in two beryllium ions, which are held in an electromagnetic trap and manipulated with ultraviolet lasers. Two magnesium ions in the trap help cool the beryllium ions. The processor allows each beryllium qubit to be placed in a "superposition" of both 1 and 0 values at the same time. Researchers can also "entangle" the two qubits, linking the pair's properties even when the ions are physically separated.
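For readers who want to see superposition and entanglement in numbers, the following is a minimal sketch of a two-qubit state written as four amplitudes. It uses the textbook Hadamard and controlled-NOT gates as stand-ins; these are an assumption for illustration and are not the laser operations the NIST team applied to its ions. The function names are hypothetical.

```python
import math

# Amplitudes are ordered |00>, |01>, |10>, |11>.
# Textbook gates used for illustration only (an assumption, not NIST's gate set).

def hadamard_on_first(state):
    """Put the first qubit into an equal superposition of 0 and 1."""
    a00, a01, a10, a11 = state
    r = 1 / math.sqrt(2)
    return [r * (a00 + a10), r * (a01 + a11),
            r * (a00 - a10), r * (a01 - a11)]

def cnot(state):
    """Flip the second qubit whenever the first qubit is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """Chance of measuring each of the four outcomes."""
    return [abs(a) ** 2 for a in state]

start = [1, 0, 0, 0]                      # both qubits begin as 0
bell = cnot(hadamard_on_first(start))     # entangled pair
probs = probabilities(bell)
print(probs)
```

In the resulting state the outcomes 00 and 11 each occur about half the time, while 01 and 10 never occur, so measuring one qubit fixes the value of the other.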

The researchers performed 160 different processing routines on the two qubits. Although there are an infinite number of possible two-qubit programs, this set of 160 is large and diverse enough to fairly represent them, Hanneke contends, thus making the processor "universal." Key to the experimental design was use of a random number generator to select the particular routines that would be executed, so all possible programs had an equal chance of selection. This approach was chosen to avoid bias in testing the processor, in the event that some programs ran better or produced more accurate outputs than others.

In the experiments, each program consisted of 31 logic operations, 15 of which were varied in the programming process. A logic operation is a rule specifying a particular manipulation of one or two qubits. In traditional computers, these operations are written into software code and performed by hardware.
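The random selection of test programs can be pictured with a short sketch. It assumes, purely for illustration, that each of the 15 varied operations is set by a single angle between 0 and 2π; the article does not say how the operations were actually parameterized, and the function names and seed are hypothetical.

```python
import math
import random

TOTAL_OPERATIONS = 31    # operations per program, from the article
VARIED_OPERATIONS = 15   # operations changed between programs, from the article
PROGRAM_COUNT = 160      # routines tested, from the article

def random_program(rng):
    """Return one hypothetical setting (an angle) per varied operation."""
    return [rng.uniform(0, 2 * math.pi) for _ in range(VARIED_OPERATIONS)]

def random_test_set(count, seed=0):
    """Draw `count` programs so no program is favored by the tester."""
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    return [random_program(rng) for _ in range(count)]

programs = random_test_set(PROGRAM_COUNT)
print(len(programs), len(programs[0]))
```

Letting a random number generator pick the settings, rather than a person, is what keeps the test set from favoring programs that happen to run well.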

The programs executed did not perform easily described mathematical calculations. Rather, they involved various single-qubit "rotations" and two-qubit entanglements. As an example of a rotation, if a qubit is envisioned as a dot on a sphere at the north pole for 0, at the south pole for 1, or on the equator for a balanced superposition of 0 and 1, the dot might be rotated to a different point on the sphere, perhaps from the northern to the southern hemisphere, making it more of a 1 than a 0.
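The sphere picture can be made concrete with a small sketch. It applies the standard rotation about the sphere's x-axis to a single qubit held as two amplitudes; the choice of axis and the function names are assumptions for illustration, not details taken from the experiment.

```python
import math

def rotate_x(state, theta):
    """Rotate a qubit (amp_0, amp_1) by angle theta about the x-axis."""
    a, b = state
    c = math.cos(theta / 2)
    s = math.sin(theta / 2)
    return (c * a - 1j * s * b, -1j * s * a + c * b)

def prob_one(state):
    """Chance of reading 1 when the qubit is measured."""
    return abs(state[1]) ** 2

north_pole = (1, 0)                               # a definite 0
equator = rotate_x(north_pole, math.pi / 2)       # balanced superposition
south_pole = rotate_x(north_pole, math.pi)        # a definite 1
southern = rotate_x(north_pole, 2 * math.pi / 3)  # more of a 1 than a 0

for name, q in [("equator", equator), ("southern", southern), ("south pole", south_pole)]:
    print(name, round(prob_one(q), 3))
```

A quarter turn from the north pole gives an even chance of reading 1, and rotating further into the southern hemisphere pushes that chance above one half.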

Each program operated accurately an average of 79 percent of the time across 900 runs, each run lasting about 37 milliseconds. To evaluate the processor and the quality of its operation, the scientists compared the measured outputs of the programs to idealized, theoretical results. They also performed extra measurements on 11 of the 160 programs, to more fully reconstruct how they ran and double-check the outputs.
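One simple way to compare measured outputs with ideal ones is sketched below. The article does not state which accuracy measure the researchers used, so the overlap score here is a stand-in, and the counts are made-up numbers chosen only to illustrate the arithmetic. The run count and run time come from the article.

```python
import math

RUNS = 900            # runs per program, from the article
RUN_SECONDS = 0.037   # about 37 milliseconds per run, from the article

def overlap(ideal, measured_counts):
    """Score from 0 to 1 for how closely measured outcome frequencies
    match ideal probabilities (a stand-in metric, not the paper's)."""
    total = sum(measured_counts)
    measured = [c / total for c in measured_counts]
    return sum(math.sqrt(p * q) for p, q in zip(ideal, measured)) ** 2

ideal = [0.5, 0.0, 0.0, 0.5]       # outcomes 00, 01, 10, 11 in theory
counts = [400, 50, 50, 400]        # made-up tallies over 900 runs
score = overlap(ideal, counts)
seconds_per_program = RUNS * RUN_SECONDS

print(round(score, 3), round(seconds_per_program, 1))
```

With these invented counts the score comes to about 0.889, and 900 runs at 37 milliseconds each add up to roughly 33 seconds of processing per program.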

Hanneke notes that many more qubits and logic operations will be required to solve large problems. And a significant challenge for future research will be reducing the errors that build up during successive operations. Program accuracy rates will need to be boosted substantially, both to achieve fault-tolerant computing and to reduce the computational "overhead" needed to correct errors after they occur, according to the paper.

Source: National Institute of Standards and Technology