Despite what sci-fi movies would have us believe, scientists cannot interact directly with the human brain because they don’t understand enough about how it codes and decodes information. But in a new study published in Science, neuroscientists at the McGovern Institute report that they have been able to read out a part of the visual system’s code involved in recognizing visual objects.

Deciphering the brain’s coding mechanisms is something that many believe is crucial to truly understanding the nature of intelligence. “We want to know how the brain works to create intelligence,” said McGovern researcher Tomaso Poggio. “Our ability to recognize objects in the visual world is among the most complex problems the brain must solve.”

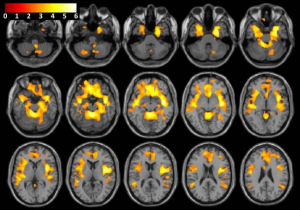

Poggio explained how, in a fraction of a second, visual input about an object runs from the retina through increasingly higher levels of the visual stream, continuously reformatting the information until it reaches the highest purely visual level, known as the inferotemporal cortex (IT). This part of the brain provides key information relating to identification and categorization to other brain regions, such as the prefrontal cortex.

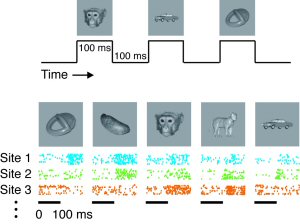

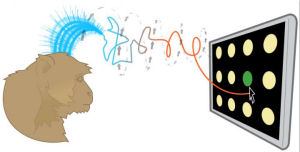

To further understand how the IT cortex represents that information, the researchers trained monkeys to recognize different objects grouped into categories, such as faces, toys, and vehicles. The images appeared in different sizes and positions in the visual field. Recording the activity of hundreds of IT neurons produced a database of IT neural patterns in response to each object.

The researchers then used a computer algorithm, which they called a “classifier,” to decipher the codes. The classifier was first “trained” to associate each object – a monkey’s face, for example – with a particular pattern of neural signals. Once the classifier was sufficiently trained, it could be used to decode new neural activity patterns. Astonishingly, even just a split second’s worth of the neural signal contained enough specific information for the classifier to identify and categorize the object, even at positions and sizes the classifier had not previously “seen.”
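For readers curious about what “training” and “decoding” mean in practice, the train-then-decode idea can be sketched in a few lines of Python. This is only an illustration and not the classifier used in the study: the neural responses are synthetic, the assumption that a change in size or position simply rescales a neuron's response is a deliberate simplification, and every name here (`simulate_response`, `train`, `decode`, `run_demo`) is hypothetical. The sketch averages training responses into one template per object, then labels a new response by finding the template it correlates with best.

```python
import math
import random


def simulate_response(pattern, gain, rng, noise=1.0):
    """Return one noisy population response (ASSUMPTION: a rescaled copy of the object's pattern)."""
    return [gain * rate + rng.gauss(0.0, noise) for rate in pattern]


def correlation(a, b):
    """Pearson correlation between two equal-length vectors."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def train(trials):
    """Average the training responses for each label into one template per object."""
    sums, counts = {}, {}
    for label, response in trials:
        if label not in sums:
            sums[label] = [0.0] * len(response)
            counts[label] = 0
        sums[label] = [s + r for s, r in zip(sums[label], response)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def decode(templates, response):
    """Return the label whose template correlates best with the response."""
    return max(templates, key=lambda label: correlation(templates[label], response))


def make_trials(patterns, gains, n_trials, rng):
    """Build (label, response) pairs for every object under every viewing condition."""
    return [
        (label, simulate_response(pattern, gain, rng))
        for label, pattern in patterns.items()
        for gain in gains
        for _ in range(n_trials)
    ]


def run_demo(seed=0, n_neurons=200, n_trials=20):
    """Train on some synthetic viewing conditions, test on held-out ones, return accuracy."""
    rng = random.Random(seed)
    # Synthetic data only: each object gets a random pattern of firing rates.
    patterns = {
        label: [rng.uniform(0.0, 10.0) for _ in range(n_neurons)]
        for label in ("face", "toy", "vehicle")
    }
    train_trials = make_trials(patterns, [1.0, 0.8], n_trials, rng)
    # Held-out conditions the classifier has never "seen" during training.
    test_trials = make_trials(patterns, [0.6, 1.2], n_trials, rng)
    templates = train(train_trials)
    correct = sum(decode(templates, response) == label for label, response in test_trials)
    return correct / len(test_trials)


if __name__ == "__main__":
    print(f"Accuracy on unseen conditions: {run_demo():.2f}")
```

Correlation is used for matching because it ignores overall scaling, which is what lets this toy decoder cope with conditions it was not trained on. With three objects, guessing at random would be right about one time in three.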

Poggio said it was surprising that so few (only several hundred) IT neurons, for such a short period, contained so much precise information. “If we could record a larger population of neurons simultaneously, we might find even more robust codes hidden in the neural patterns and extract even fuller information.”

The research has many potential real-world applications, such as artificial visual systems for security scanners or automobile pedestrian alert systems.
